Speed up Docker image build process of a Rails app

July 25, 2018

tl;dr : We reduced the Docker image building time from 10 minutes to 5 minutes by reusing bundler cache and by precompiling assets.

We deploy one of our Rails applications on a dedicated Kubernetes cluster. Kubernetes is a good fit for us because it automatically scales the containerized application horizontally based on load and resource consumption. The prerequisite for deploying any kind of application on Kubernetes is that the application must be containerized. We use Docker to containerize our application.

We have been successfully containerizing and deploying our Rails application on Kubernetes for about a year now. Although containerization was working fine, we were not happy with the overall time spent to containerize the application whenever we changed the source code and deployed the app.

We use Jenkins to build on-demand Docker images of our application with the help of the CloudBees Docker Build and Publish plugin.

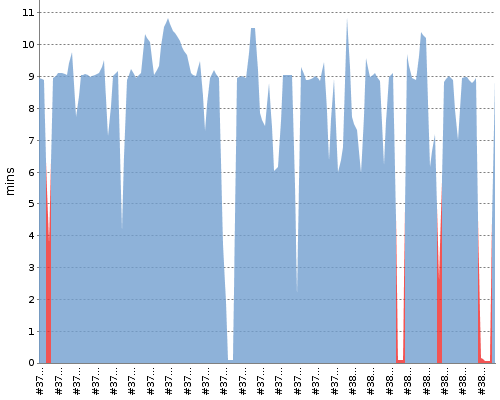

We observed that the average build time of a Jenkins job to build a Docker image was about 9 to 10 minutes.

Investigating what takes most time

We wipe the workspace folder of the Jenkins job after finishing each Jenkins build to avoid any unintentional behavior caused by the residue left from a previous build. The application's folder is about 500 MiB in size. Each Jenkins build spends about 20 seconds to perform a shallow Git clone of the latest commit of the specified git branch from our remote GitHub repository.

After cloning the latest source code, Jenkins executes the docker build command to build a Docker image with a unique tag to containerize the cloned source code of the application.

The Jenkins build spends another 10 seconds invoking the docker build command and sending the build context to the Docker daemon.

01:05:43 [docker-builder] $ docker build --build-arg RAILS_ENV=production -t bigbinary/xyz:production-role-management-feature-1529436929 --pull=true --file=./Dockerfile /var/lib/jenkins/workspace/docker-builder

01:05:53 Sending build context to Docker daemon 489.4 MB

We use the same Docker image on a number of Kubernetes pods. Therefore, we do not want to execute the bundle install and rake assets:precompile tasks while starting a container in each pod, since that would prevent the pod from accepting any requests until these tasks are finished.

The recommended approach is to run the bundle install and rake assets:precompile tasks while or before containerizing the Rails application.

Following is a trimmed down version of our actual Dockerfile, which is used by the docker build command to containerize our application.

FROM bigbinary/xyz-base:latest

ENV APP_PATH /data/app/

WORKDIR $APP_PATH

ADD . $APP_PATH

ARG RAILS_ENV

RUN bin/bundle install --without development test

RUN bin/rake assets:precompile

CMD ["bin/bundle", "exec", "puma"]

The RUN instructions in the above Dockerfile execute the bundle install and rake assets:precompile tasks while building a Docker image. Therefore, when a Kubernetes pod is created using such a Docker image, Kubernetes pulls the image, starts a Docker container using that image inside the pod and runs the puma server immediately.

The base Docker image which we use in the FROM instruction contains the necessary system packages. We rarely need to update any system package. Therefore, an intermediate layer which may have been built previously for that instruction is reused while executing the docker build command. If the layer for the FROM instruction is reused, Docker also reuses the cached layers for the next two instructions, ENV and WORKDIR, since neither of them ever changes.

01:05:53 Step 1/8 : FROM bigbinary/xyz-base:latest

01:05:53 latest: Pulling from bigbinary/xyz-base

01:05:53 Digest: sha256:193951cad605d23e38a6016e07c5d4461b742eb2a89a69b614310ebc898796f0

01:05:53 Status: Image is up to date for bigbinary/xyz-base:latest

01:05:53 ---> c2ab738db405

01:05:53 Step 2/8 : ENV APP_PATH /data/app/

01:05:53 ---> Using cache

01:05:53 ---> 5733bc978f19

01:05:53 Step 3/8 : WORKDIR $APP_PATH

01:05:53 ---> Using cache

01:05:53 ---> 0e5fbc868af8

For an ADD instruction, Docker examines the contents of the files being added and calculates a checksum for each file. Since the source code changes often, the previously cached layer for the ADD instruction is invalidated by the mismatching checksums. Therefore, the 4th instruction in our Dockerfile, ADD, has to add the local files from the provided build context to the filesystem of the image being built in a separate intermediate container instead of reusing the previously cached layer. On average, this instruction takes about 25 seconds.

01:05:53 Step 4/8 : ADD . $APP_PATH

01:06:12 ---> cbb9a6ac297e

01:06:17 Removing intermediate container 99ca98218d99

We need to build Docker images for our application using different Rails environments. To achieve that, we trigger a parameterized Jenkins build by specifying the needed Rails environment parameter. This parameter is then passed to the docker build command using the --build-arg RAILS_ENV=production option. The ARG instruction in the Dockerfile defines the RAILS_ENV variable, which is implicitly available as an environment variable to the instructions that follow the ARG instruction. Even if the previous ADD instruction did not invalidate the build cache, a "cache miss" occurs if the value of the ARG variable differs from a previous build, and the build cache is invalidated for the subsequent instructions.

01:06:17 Step 5/8 : ARG RAILS_ENV

01:06:17 ---> Running in b793b8cc2fe7

01:06:22 ---> b8a70589e384

01:06:24 Removing intermediate container b793b8cc2fe7

The next two RUN instructions install gems and precompile static assets using Sprockets. Since the earlier instructions have already invalidated the build cache, these RUN instructions are almost always executed instead of reusing a cached layer. The bundle install command takes about 2.5 minutes and the rake assets:precompile task takes about 4.35 minutes.

01:06:24 Step 6/8 : RUN bin/bundle install --without development test

01:06:24 ---> Running in a556c7ca842a

01:06:25 bin/bundle install --without development test

01:08:22 ---> 82ab04f1ff42

01:08:40 Removing intermediate container a556c7ca842a

01:08:58 Step 7/8 : RUN bin/rake assets:precompile

01:08:58 ---> Running in b345c73a22c

01:08:58 bin/bundle exec rake assets:precompile

01:09:07 ** Invoke assets:precompile (first_time)

01:09:07 ** Invoke assets:environment (first_time)

01:09:07 ** Execute assets:environment

01:09:07 ** Invoke environment (first_time)

01:09:07 ** Execute environment

01:09:12 ** Execute assets:precompile

01:13:20 ---> 57bf04f3c111

01:13:23 Removing intermediate container b345c73a22c

Both of the above RUN instructions were clearly the main culprits slowing down the whole docker build command and thus the Jenkins build.

The final CMD instruction, which sets puma as the command to run when a container starts, takes another 10 seconds. After building the Docker image, the docker push command spends another minute.

01:13:23 Step 8/8 : CMD ["bin/bundle", "exec", "puma"]

01:13:23 ---> Running in 104967ad1553

01:13:31 ---> 35d2259cdb1d

01:13:34 Removing intermediate container 104967ad1553

01:13:34 Successfully built 35d2259cdb1d

01:13:35 [docker-builder] $ docker inspect 35d2259cdb1d

01:13:35 [docker-builder] $ docker push bigbinary/xyz:production-role-management-feature-1529436929

01:13:35 The push refers to a repository [docker.io/bigbinary/xyz]

01:14:21 d67854546d53: Pushed

01:14:22 production-role-management-feature-1529436929: digest: sha256:07f86cfd58fac412a38908d7a7b7d0773c6a2980092df416502d7a5c051910b3 size: 4106

01:14:22 Finished: SUCCESS

So, we found the exact commands which were causing the docker build command to take so much time to build a Docker image.
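The per-step durations quoted in this section can be read off the timestamps in the Jenkins console log. As a minimal sketch, assuming both timestamps fall on the same day, the helper below computes the seconds between two log lines. The function names `to_seconds` and `elapsed` are ours for illustration and are not part of Jenkins or Docker.

```shell
#!/bin/sh
# Convert an HH:MM:SS timestamp (as printed by Jenkins) to seconds since midnight.
to_seconds() {
  echo "$1" | awk -F: '{ print ($1 * 3600) + ($2 * 60) + $3 }'
}

# Print the number of seconds between two timestamps on the same day.
elapsed() {
  echo $(( $(to_seconds "$2") - $(to_seconds "$1") ))
}

elapsed 01:06:24 01:08:40   # bundle install step, including container cleanup
elapsed 01:08:58 01:13:20   # rake assets:precompile step
```

For the log above, the precompile step works out to 262 seconds, which is in line with the roughly 4.35 minutes reported for that task.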

Let's summarize the steps involved in building our Docker image and the average time each needed to finish.

| Command or Instruction | Average Time Spent |
|---|---|
| Shallow clone of Git Repository by Jenkins | 20 Seconds |
| Invocation of docker build by Jenkins and sending build context to Docker daemon | 10 Seconds |
| FROM bigbinary/xyz-base:latest | 0 Seconds |
| ENV APP_PATH /data/app/ | 0 Seconds |
| WORKDIR $APP_PATH | 0 Seconds |
| ADD . $APP_PATH | 25 Seconds |
| ARG RAILS_ENV | 7 Seconds |
| RUN bin/bundle install --without development test | 2.5 Minutes |
| RUN bin/rake assets:precompile | 4.35 Minutes |
| CMD ["bin/bundle", "exec", "puma"] followed by docker push | 1.15 Minutes |
| Total | 9 Minutes |

Often, people build Docker images from a single Git branch, like master. Since changes in a single branch are incremental and the Gemfile.lock file hardly differs across commits, the Bundler cache need not be managed explicitly. Instead, Docker automatically reuses the previously built layer for the RUN bundle install instruction if the Gemfile.lock file remains unchanged.

In our case, this does not happen. For every new feature or bug fix, we create a separate Git branch. To verify the changes on a particular branch, we deploy a separate review app which serves the code from that branch. To support this workflow, every day we need to build a lot of Docker images containing source code from varying Git branches as well as with varying environments. Most of the time, the Gemfile.lock and the assets differ across these Git branches. Therefore, it is hard for Docker to cache the layers for the bundle install and rake assets:precompile tasks and reuse them across docker build runs with different application source code and a different environment. This is why the previously built layers for the RUN bin/bundle install and RUN bin/rake assets:precompile instructions were often not reused in our case, and both instructions were executed from scratch in almost every build.
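One way to picture why these builds rarely hit the cache is to treat a cached layer as a lookup keyed on its inputs. The following is a toy model only, not Docker's actual algorithm. The `cache_key` function and its hashing scheme are hypothetical, and `sha256sum` from GNU coreutils is assumed to be available.

```shell
#!/bin/sh
# Toy model: derive a key from a build argument plus the contents of the
# files an instruction depends on. Same inputs give the same key (cache hit);
# any difference gives a new key (cache miss).
cache_key() {
  env_name="$1"
  shift
  { printf '%s\n' "$env_name"; cat "$@"; } | sha256sum | cut -d' ' -f1
}

# Example (run inside an application checkout):
#   cache_key production Gemfile.lock
```

Two branches with different Gemfile.lock contents, or the same branch built for staging and for production, produce different keys, which is the situation described above.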

Before discussing the approaches to speed up our Docker build flow, let's familiarize ourselves with the bundle install and rake assets:precompile tasks and how to speed them up by reusing cache.

Speeding up "bundle install" by using cache

By default, Bundler installs gems at the location set by RubyGems. Bundler also looks up installed gems at the same location.

This location can be changed explicitly by using the --path option.

If Gemfile.lock does not exist, or no gem is found at the explicitly provided location or at the default gem path, then the bundle install command fetches all remote sources, resolves dependencies if needed and installs the required gems as per the Gemfile.

The bundle install --path=vendor/cache command installs the gems at the vendor/cache location in the current directory. If the same command is run again without any change in the Gemfile, it finishes almost instantly because the gems are already installed and cached in vendor/cache and Bundler need not fetch any new gems.

The tree structure of vendor/cache directory looks like this.

vendor/cache

├── aasm-4.12.3.gem

├── actioncable-5.1.4.gem

├── activerecord-5.1.4.gem

├── [...]

├── ruby

│ └── 2.4.0

│ ├── bin

│ │ ├── aws.rb

│ │ ├── dotenv

│ │ ├── erubis

│ │ ├── [...]

│ ├── build_info

│ │ └── nokogiri-1.8.1.info

│ ├── bundler

│ │ └── gems

│ │ ├── activeadmin-043ba0c93408

│ │ [...]

│ ├── cache

│ │ ├── aasm-4.12.3.gem

│ │ ├── actioncable-5.1.4.gem

│ │ ├── [...]

│ │ ├── bundler

│ │ │ └── git

│ └── specifications

│ ├── aasm-4.12.3.gemspec

│ ├── actioncable-5.1.4.gemspec

│ ├── activerecord-5.1.4.gemspec

│ ├── [...]

│ [...]

[...]

It appears that Bundler keeps two separate copies of the .gem files at two different locations, vendor/cache and vendor/cache/ruby/VERSION_HERE/cache. Therefore, even if we remove a gem from the Gemfile, that gem will be removed only from the vendor/cache directory. The vendor/cache/ruby/VERSION_HERE/cache directory will still have the cached .gem file for that removed gem.

Let's see an example.

We have the gem 'aws-sdk', '2.11.88' in our Gemfile and that gem is installed.

$ ls vendor/cache/aws-sdk-*

vendor/cache/aws-sdk-2.11.88.gem

vendor/cache/aws-sdk-core-2.11.88.gem

vendor/cache/aws-sdk-resources-2.11.88.gem

$ ls vendor/cache/ruby/2.4.0/cache/aws-sdk-*

vendor/cache/ruby/2.4.0/cache/aws-sdk-2.11.88.gem

vendor/cache/ruby/2.4.0/cache/aws-sdk-core-2.11.88.gem

vendor/cache/ruby/2.4.0/cache/aws-sdk-resources-2.11.88.gem

Now, we will remove the aws-sdk gem from Gemfile and run bundle install.

$ bundle install --path=vendor/cache

Using rake 12.3.0

Using aasm 4.12.3

[...]

Updating files in vendor/cache

Removing outdated .gem files from vendor/cache

* aws-sdk-2.11.88.gem

* jmespath-1.3.1.gem

* aws-sdk-resources-2.11.88.gem

* aws-sdk-core-2.11.88.gem

* aws-sigv4-1.0.2.gem

Bundled gems are installed into `./vendor/cache`

$ ls vendor/cache/aws-sdk-*

no matches found: vendor/cache/aws-sdk-*

$ ls vendor/cache/ruby/2.4.0/cache/aws-sdk-*

vendor/cache/ruby/2.4.0/cache/aws-sdk-2.11.88.gem

vendor/cache/ruby/2.4.0/cache/aws-sdk-core-2.11.88.gem

vendor/cache/ruby/2.4.0/cache/aws-sdk-resources-2.11.88.gem

We can see that the cached version of gem(s) remained unaffected.
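The leftover copies can also be listed directly. This is a small sketch, assuming the directory layout shown in the tree above; `leftover_gems` is a hypothetical helper written for this post, not a Bundler command.

```shell
#!/bin/sh
# List .gem files that exist in <dir>/ruby/*/cache but no longer in <dir>.
# <dir> is the directory passed to `bundle install --path`, e.g. vendor/cache.
leftover_gems() {
  gem_dir="$1"
  for gem in "$gem_dir"/ruby/*/cache/*.gem; do
    [ -e "$gem" ] || continue          # the glob matched nothing
    name=$(basename "$gem")
    [ -e "$gem_dir/$name" ] || echo "$name"
  done
}

# Example (run inside the application directory):
#   leftover_gems vendor/cache
```

After removing aws-sdk from the Gemfile as shown above, the output would be expected to include the aws-sdk .gem files that remain under vendor/cache/ruby/2.4.0/cache.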

If we add the same gem 'aws-sdk', '2.11.88' back to the Gemfile and run bundle install, Bundler will install that gem from the cache instead of fetching it from the remote gem repository.

$ bundle install --path=vendor/cache

Resolving dependencies........

[...]

Using aws-sdk 2.11.88

[...]

Updating files in vendor/cache

* aws-sigv4-1.0.3.gem

* jmespath-1.4.0.gem

* aws-sdk-core-2.11.88.gem

* aws-sdk-resources-2.11.88.gem

* aws-sdk-2.11.88.gem

$ ls vendor/cache/aws-sdk-*

vendor/cache/aws-sdk-2.11.88.gem

vendor/cache/aws-sdk-core-2.11.88.gem

vendor/cache/aws-sdk-resources-2.11.88.gem

What we understand from this is that if we can reuse the explicitly provided vendor/cache directory every time we need to execute the bundle install command, then the command will be much faster because Bundler will use gems from the local cache instead of fetching them from the Internet.

Speeding up "rake assets:precompile" task by using cache

Code written in languages such as TypeScript, Elm or JSX cannot be served directly to the browser. Almost all web browsers understand JavaScript (ES5), CSS and image files. Therefore, we need to transpile, compile or convert the source assets into formats which browsers can understand. In Rails, Sprockets is the most widely used library for managing and compiling assets.

In the development environment, Sprockets compiles assets on the fly as and when needed using Sprockets::Server. In the production environment, the recommended approach is to precompile assets into a directory on disk and serve them using a web server like Nginx.

Precompilation is a multi-step process for converting a source asset file into a static and optimized form using components such as processors, transformers, compressors, directives, environments, a manifest and pipelines with the help of various gems such as sass-rails, execjs, etc. The assets need to be precompiled in production so that Sprockets need not resolve inter-dependencies between required source dependencies every time a static asset is requested. To understand how Sprockets works in great detail, please read this guide.

When we compile source assets using the rake assets:precompile task, we can find the compiled assets in the public/assets directory inside our Rails application.

$ ls public/assets

manifest-15adda275d6505e4010b95819cf61eb3.json

icons-6250335393ad03df1c67eafe138ab488.eot

icons-6250335393ad03df1c67eafe138ab488.eot.gz

icons-b341bf083c32f9e244d0dea28a763a63.svg

icons-b341bf083c32f9e244d0dea28a763a63.svg.gz

application-8988c56131fcecaf914b22f54359bf20.js

application-8988c56131fcecaf914b22f54359bf20.js.gz

xlsx.full.min-feaaf61b9d67aea9f122309f4e78d5a5.js

xlsx.full.min-feaaf61b9d67aea9f122309f4e78d5a5.js.gz

application-adc697aed7731c864bafaa3319a075b1.css

application-adc697aed7731c864bafaa3319a075b1.css.gz

FontAwesome-42b44fdc9088cae450b47f15fc34c801.otf

FontAwesome-42b44fdc9088cae450b47f15fc34c801.otf.gz

[...]

We can see that each source asset has been compiled and minified, along with a gzipped version of it.

Note that the assets have a unique digest or fingerprint in their file names. A digest is a hash calculated by Sprockets from the contents of an asset file, so it is deterministic rather than random. If the contents of an asset change, then that asset's digest also changes. The digest is mainly used for cache busting, so that a new version of the same asset can be generated if the source file is modified or the configured cache period has expired.
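The idea of a content-based fingerprint can be sketched in a few lines of shell. This is a simplification for illustration: Sprockets' real digest may cover more than the raw file contents, the `fingerprinted_name` helper is hypothetical, `md5sum` from GNU coreutils is assumed, and the file is assumed to have an extension.

```shell
#!/bin/sh
# Print a fingerprinted name such as application-<md5>.js for a given file.
fingerprinted_name() {
  file="$1"
  base=$(basename "$file")
  digest=$(md5sum "$file" | cut -d' ' -f1)
  # Insert the digest before the last extension only, so that
  # xlsx.full.min.js becomes xlsx.full.min-<digest>.js.
  echo "${base%.*}-${digest}.${base##*.}"
}

# Example (run inside the application directory):
#   fingerprinted_name app/assets/javascripts/application.js
```

Because the digest depends only on the contents, an unchanged file keeps its name across builds, while any edit produces a new name.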

The rake assets:precompile task also generates a manifest file along with the precompiled assets. This manifest is used by Sprockets to perform fast lookups without having to actually compile our assets code.

An example manifest file, in our case public/assets/manifest-15adda275d6505e4010b95819cf61eb3.json, looks like this.

{

"files": {

"application-8988c56131fcecaf914b22f54359bf20.js": {

"logical_path": "application.js",

"mtime": "2018-07-06T07:32:27+00:00",

"size": 3797752,

"digest": "8988c56131fcecaf914b22f54359bf20"

},

"xlsx.full.min-feaaf61b9d67aea9f122309f4e78d5a5.js": {

"logical_path": "xlsx.full.min.js",

"mtime": "2018-07-05T22:06:17+00:00",

"size": 883635,

"digest": "feaaf61b9d67aea9f122309f4e78d5a5"

},

"application-adc697aed7731c864bafaa3319a075b1.css": {

"logical_path": "application.css",

"mtime": "2018-07-06T07:33:12+00:00",

"size": 242611,

"digest": "adc697aed7731c864bafaa3319a075b1"

},

"FontAwesome-42b44fdc9088cae450b47f15fc34c801.otf": {

"logical_path": "FontAwesome.otf",

"mtime": "2018-06-20T06:51:49+00:00",

"size": 134808,

"digest": "42b44fdc9088cae450b47f15fc34c801"

},

[...]

},

"assets": {

"icons.eot": "icons-6250335393ad03df1c67eafe138ab488.eot",

"icons.svg": "icons-b341bf083c32f9e244d0dea28a763a63.svg",

"application.js": "application-8988c56131fcecaf914b22f54359bf20.js",

"xlsx.full.min.js": "xlsx.full.min-feaaf61b9d67aea9f122309f4e78d5a5.js",

"application.css": "application-adc697aed7731c864bafaa3319a075b1.css",

"FontAwesome.otf": "FontAwesome-42b44fdc9088cae450b47f15fc34c801.otf",

[...]

}

}

Using this manifest file, Sprockets can quickly find a fingerprinted file name using that file's logical file name and vice versa.
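To make the lookup concrete, here is a rough sketch that resolves a logical path to its fingerprinted name by reading the "assets" section of a manifest. It assumes one "key": "value" pair per line, as in the example above, and the `lookup_asset` helper is hypothetical. A real JSON tool such as jq would be more robust than sed for this.

```shell
#!/bin/sh
# Print the fingerprinted file name for a logical path from a manifest file.
lookup_asset() {
  manifest="$1"
  # Escape dots so that "application.js" is matched literally.
  logical=$(printf '%s' "$2" | sed 's/\./\\./g')
  # Match lines of the form   "<logical>": "<fingerprinted>"
  sed -n "s|^[[:space:]]*\"$logical\":[[:space:]]*\"\([^\"]*\)\".*|\1|p" "$manifest"
}

# Example (run inside the application directory):
#   lookup_asset public/assets/manifest-15adda275d6505e4010b95819cf61eb3.json application.js
```

Entries in the "files" section do not match, because their keys are the fingerprinted names and their values are objects rather than strings.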

Sprockets also generates a cache in binary format at tmp/cache/assets in the Rails application's folder for the specified Rails environment. Following is an example tree structure of the tmp/cache/assets directory, generated automatically after executing the RAILS_ENV=environment_here rake assets:precompile command for each Rails environment.

$ cd tmp/cache/assets && tree

.

├── demo

│ ├── sass

│ │ ├── 7de35a15a8ab2f7e131a9a9b42f922a69327805d

│ │ │ ├── application.css.sassc

│ │ │ └── bootstrap.css.sassc

│ │ ├── [...]

│ └── sprockets

│ ├── 002a592d665d92efe998c44adc041bd3

│ ├── 7dd8829031d3067dcf26ffc05abd2bd5

│ └── [...]

├── production

│ ├── sass

│ │ ├── 80d56752e13dda1267c19f4685546798718ad433

│ │ │ ├── application.css.sassc

│ │ │ └── bootstrap.css.sassc

│ │ ├── [...]

│ └── sprockets

│ ├── 143f5a036c623fa60d73a44d8e5b31e7

│ ├── 31ae46e77932002ed3879baa6e195507

│ └── [...]

└── staging

├── sass

│ ├── 2101b41985597d41f1e52b280a62cd0786f2ee51

│ │ ├── application.css.sassc

│ │ └── bootstrap.css.sassc

│ ├── [...]

└── sprockets

├── 2c154d4604d873c6b7a95db6a7d5787a

├── 3ae685d6f922c0e3acea4bbfde7e7466

└── [...]

Let's inspect the contents of an example cached file. Since the cached file is in binary form, we can use the cat -v command to display the non-printing control characters as well as the binary content in text form.

$ cat -v tmp/cache/assets/staging/sprockets/2c154d4604d873c6b7a95db6a7d5787a

^D^H{^QI"

class^F:^FETI"^SProcessedAsset^F;^@FI"^Qlogical_path^F;^@TI"^]components/Comparator.js^F;^@TI"^Mpathname^F;^@TI"T$root/app/assets/javascripts/components/Comparator.jsx^F;^@FI"^Qcontent_type^F;^@TI"^[application/javascript^F;^@TI"

mtime^F;^@Tl+^GM-gM-z;[I"^Klength^F;^@Ti^BM-L^BI"^Kdigest^F;^@TI"%18138d01fe4c61bbbfeac6d856648ec9^F;^@FI"^Ksource^F;^@TI"^BM-L^Bvar Comparator = function (props) {

var comparatorOptions = [React.createElement("option", { key: "?", value: "?" })];

var allComparators = props.metaData.comparators;

var fieldDataType = props.fieldDataType;

var allowedComparators = allComparators[fieldDataType] || allComparators.integer;

return React.createElement(

"select",

{

id: "comparator-" + props.id,

disabled: props.disabled,

onChange: props.handleComparatorChange,

value: props.comparatorValue },

comparatorOptions.concat(allowedComparators.map(function (comparator, id) {

return React.createElement(

"option",

{ key: id, value: comparator },

comparator

);

}))

);

};^F;^@TI"^Vdependency_digest^F;^@TI"%d6c86298311aa7996dd6b5389f45949f^F;^@FI"^Srequired_paths^F;^@T[^FI"T$root/app/assets/javascripts/components/Comparator.jsx^F;^@FI"^Udependency_paths^F;^@T[^F{^HI" path^F;^@TI"T$root/app/assets/javascripts/components/Comparator.jsx^F;^@F@^NI"^^2018-07-03T22:38:31+00:00^F;^@T@^QI"%51ab9ceec309501fc13051c173b0324f^F;^@FI"^M_version^F;^@TI"%30fd133466109a42c8cede9d119c3992^F;^@F

We can see that there are some weird looking characters in the above file because it is not a regular file meant to be read by humans. It also seems to hold some important information such as the MIME type, the original source file's path, the compiled source, the digest, and the paths and digests of required dependencies.

The above cache entry appears to belong to the original source file located at app/assets/javascripts/components/Comparator.jsx, whose actual contents in JSX and ES6 syntax are shown below.

const Comparator = props => {

const comparatorOptions = [<option key="?" value="?" />];

const allComparators = props.metaData.comparators;

const fieldDataType = props.fieldDataType;

const allowedComparators =

allComparators[fieldDataType] || allComparators.integer;

return (

<select

id={`comparator-${props.id}`}

disabled={props.disabled}

onChange={props.handleComparatorChange}

value={props.comparatorValue}

>

{comparatorOptions.concat(

allowedComparators.map((comparator, id) => (

<option key={id} value={comparator}>

{comparator}

</option>

))

)}

</select>

);

};

If a similar cache exists for a Rails environment under tmp/cache/assets and no source asset file is modified, then re-running the rake assets:precompile task for the same environment will finish quickly. This is because Sprockets will reuse the cache and therefore will not need to resolve the inter-asset dependencies, perform conversions, etc.

Even if certain source assets are modified, Sprockets will rebuild the cache and regenerate compiled and fingerprinted assets just for the modified source assets.

Therefore, we can now understand that if we can reuse the tmp/cache/assets and public/assets directories every time we need to execute the rake assets:precompile task, then Sprockets will perform precompilation much faster.
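Carrying these directories from one build to the next boils down to packing them at the end of a build and unpacking them at the start of the following one. The sketch below shows that idea with tar and a local archive. The `save_cache` and `restore_cache` helpers are hypothetical names, and the archive path is assumed to be absolute.

```shell
#!/bin/sh
# Directories worth carrying between builds (taken from the discussion above).
CACHE_DIRS="vendor/cache tmp/cache/assets public/assets"

# save_cache <app_dir> <archive>: pack whichever cache directories exist.
save_cache() {
  app_dir="$1"
  archive="$2"
  existing=""
  for dir in $CACHE_DIRS; do
    if [ -d "$app_dir/$dir" ]; then
      existing="$existing $dir"
    fi
  done
  [ -n "$existing" ] || return 0
  # $existing is intentionally unquoted so it splits into separate paths.
  tar -czf "$archive" -C "$app_dir" $existing
}

# restore_cache <app_dir> <archive>: unpack a previous archive, if there is one.
restore_cache() {
  app_dir="$1"
  archive="$2"
  [ -f "$archive" ] || return 0
  mkdir -p "$app_dir"
  tar -xzf "$archive" -C "$app_dir"
}
```

A build would call `restore_cache` right after cloning the source, run bundle install and rake assets:precompile, and finish with `save_cache`.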

Speeding up "docker build" -- first attempt

As discussed above, we were now familiar with how to speed up the bundle install and rake assets:precompile commands individually.

We decided to use this knowledge to speed up our slow docker build command.

Our initial thought was to mount a directory on the host Jenkins machine into the filesystem of the image being built by the docker build command. This mounted directory could then be used as a cache directory to persist the cache files of both the bundle install and rake assets:precompile commands run as part of the docker build command in each Jenkins build. Then every new build could reuse the previous build's cache and therefore finish faster.

Unfortunately, this wasn't possible because Docker did not support it yet. Unlike the docker run command, the docker build command cannot mount a host directory. A feature request for providing a shared host machine directory path option to the docker build command is still open here.

To reuse the cache and run faster, we need to carry the cache files of both the bundle install and rake assets:precompile commands from one docker build (and therefore one Jenkins build) to the next. We were looking for some place which could be treated as a shared cache location and accessed during each build.

We decided to use Amazon's S3 service to solve this problem.

To upload and download files from S3, we needed to inject credentials for S3 into the build context provided to the docker build command.

Alternatively, these S3 credentials can be provided to the docker build command using the --build-arg option as discussed earlier.

We used the s3cmd command-line utility to interact with the S3 service.

The following shell script, named install_gems_and_precompile_assets.sh, was configured to be executed using a RUN instruction while running the docker build command.

#!/bin/sh
set -ex

# Step 1.
# Move the S3 credentials file, if present, to where s3cmd expects it.
if [ -e s3cfg ]; then mv s3cfg ~/.s3cfg; fi

bundler_cache_path="vendor/cache"
assets_cache_path="tmp/cache/assets"
precompiled_assets_path="public/assets"
cache_archive_name="cache.tar.gz"
s3_bucket_path="s3://docker-builder-bundler-and-assets-cache"
s3_cache_archive_path="$s3_bucket_path/$cache_archive_name"

# Step 2.
# Fetch tarball archive containing cache and extract it.
# The "tar" command extracts the archive into "vendor/cache",
# "tmp/cache/assets" and "public/assets".
if s3cmd get "$s3_cache_archive_path"; then
  tar -xzf "$cache_archive_name" && rm -f "$cache_archive_name"
fi

# Step 3.
# Install gems from "vendor/cache" and pack them up.
bin/bundle install --without development test --path "$bundler_cache_path"
bin/bundle pack --quiet

# Step 4.
# Precompile assets.
# Note that the "RAILS_ENV" is already defined in Dockerfile
# and will be used implicitly.
bin/rake assets:precompile

# Step 5.
# Compress "vendor/cache", "tmp/cache/assets"
# and "public/assets" directories into a tarball archive.
tar -zcf "$cache_archive_name" "$bundler_cache_path" \
  "$assets_cache_path" \
  "$precompiled_assets_path"

# Step 6.
# Push the compressed archive containing updated cache to S3.
s3cmd put "$cache_archive_name" "$s3_cache_archive_path" || true

# Step 7.
# Remove the archive and the S3 credentials file.
rm -f "$cache_archive_name" ~/.s3cfg

Let's discuss the various steps annotated in the above script.

1. The S3 credentials file injected by Jenkins into the build context needs to be placed at the ~/.s3cfg location, so we move that credentials file accordingly.
2. Try to fetch the compressed tarball archive comprising directories such as vendor/cache, tmp/cache/assets and public/assets. If it exists, extract the tarball archive at the respective paths and remove that tarball.
3. Execute the bundle install command which would reuse the extracted cache from vendor/cache.
4. Execute the rake assets:precompile command which would reuse the extracted cache from tmp/cache/assets and public/assets.
5. Compress the cache directories vendor/cache, tmp/cache/assets and public/assets in a tarball archive.
6. Upload the compressed tarball archive containing updated cache directories to S3.
7. Remove the compressed tarball archive and the S3 credentials file.

Please note that in our actual case we generated different tarball archives depending upon the provided RAILS_ENV environment. For demonstration, we use just a single archive here.
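A per-environment archive can be as simple as putting the environment name into the file name. The naming pattern below is an assumption made for illustration and is not necessarily the one we used; `cache_archive_name` is a hypothetical helper.

```shell
#!/bin/sh
# Return an archive name specific to one Rails environment.
# The "cache-<env>.tar.gz" pattern is hypothetical.
cache_archive_name() {
  echo "cache-$1.tar.gz"
}

s3_bucket_path="s3://docker-builder-bundler-and-assets-cache"
# RAILS_ENV falls back to "production" here only so the sketch runs standalone.
echo "$s3_bucket_path/$(cache_archive_name "${RAILS_ENV:-production}")"
```

Keeping one archive per environment prevents, for example, a staging build from overwriting the Sprockets cache of a production build.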

The Dockerfile needed to be updated to execute the install_gems_and_precompile_assets.sh script.

FROM bigbinary/xyz-base:latest

ENV APP_PATH /data/app/

WORKDIR $APP_PATH

ADD . $APP_PATH

ARG RAILS_ENV

RUN ./install_gems_and_precompile_assets.sh

CMD ["bin/bundle", "exec", "puma"]

With this setup, the average time of the Jenkins builds was reduced to about 5 minutes. This was a great achievement for us.

We reviewed this approach in great detail. We found that although the approach was working fine, there was a major security flaw. It is not at all recommended to inject confidential information such as login credentials, private keys, etc. as part of the build context or using build arguments while building a Docker image using the docker build command. And we were actually injecting S3 credentials into the Docker image. Such confidential credentials provided while building a Docker image can be inspected using the docker history command by anyone who has access to that Docker image.

For this reason, we needed to abandon this approach and look for another.

Speeding up "docker build" -- second attempt

In our second attempt, we decided to execute the bundle install and rake assets:precompile commands outside the docker build command, that is, in the Jenkins build itself. So with the new approach, we first execute the bundle install and rake assets:precompile commands as part of the Jenkins build and then execute docker build as usual. With this approach, we could now take advantage of the inter-build caching benefits provided by Jenkins.

The prerequisite was to have all the system packages required by the gems listed in the application's Gemfile installed on the Jenkins machine. We installed all the necessary system packages on our Jenkins server.

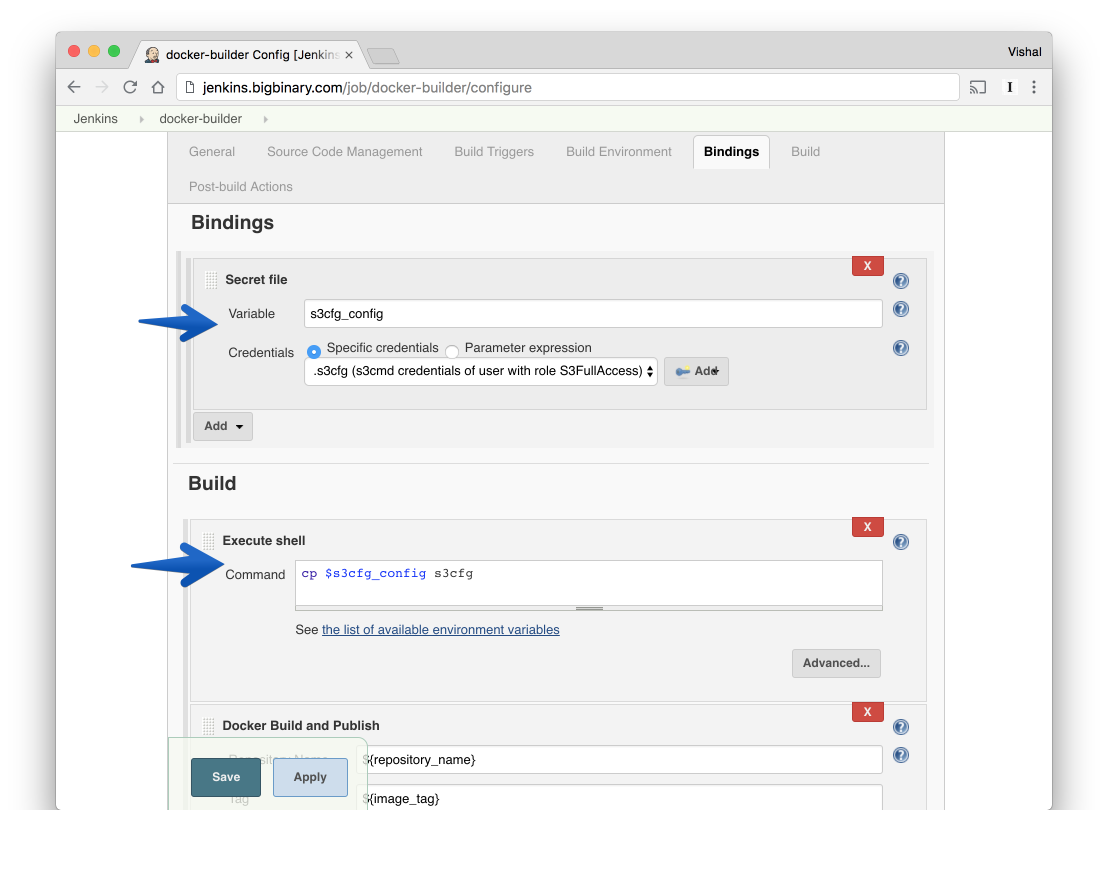

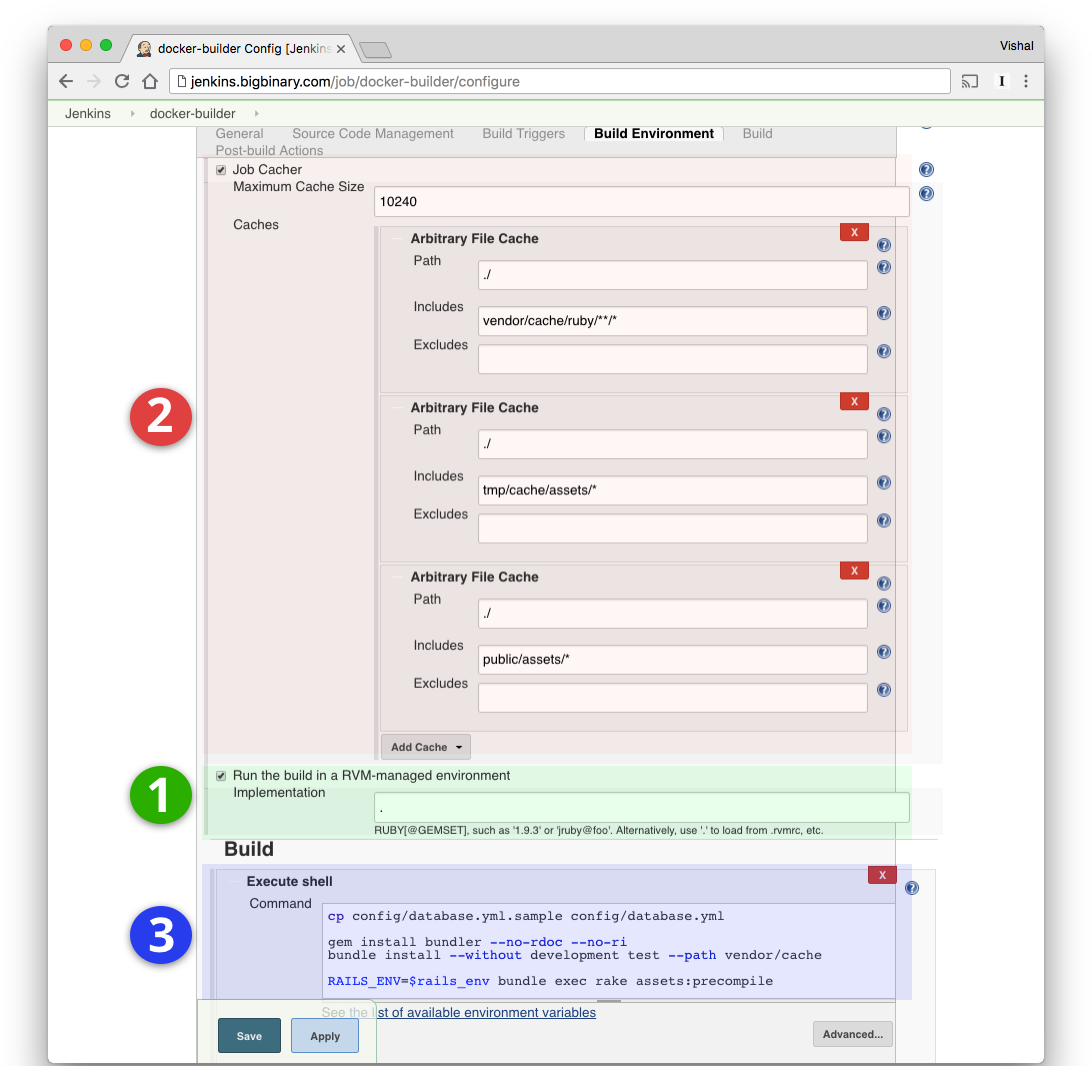

Following screenshot highlights the things that we needed to configure in our Jenkins job to make this approach work.

1. Running the Jenkins build in RVM managed environment with the specified Ruby version

Sometimes, we need to use a different Ruby version, as specified in the .ruby-version file in the cloned source code of the application. By default, the bundle install command would install the gems for the system Ruby version available on the Jenkins machine. This was not acceptable for us. Therefore, we needed a way to execute the bundle install command in the Jenkins build in an isolated environment which could use the Ruby version specified in the .ruby-version file instead of the default system Ruby version. To address this, we used the RVM plugin for Jenkins. The RVM plugin enabled us to run the Jenkins build in an isolated environment by using or installing the Ruby version specified in the .ruby-version file. The section highlighted in green in the above screenshot shows the configuration required to enable this plugin.

2. Carrying cache files between Jenkins builds required to speed up "bundle install" and "rake assets:precompile" commands

We used the Job Cacher Jenkins plugin to persist and carry the cache directories vendor/cache, tmp/cache/assets and public/assets between builds. At the beginning of a Jenkins build, just after cloning the source code of the application, the Job Cacher plugin restores the previously cached version of these directories into the current build. Similarly, before finishing a Jenkins build, the Job Cacher plugin copies the current version of these directories to /var/lib/jenkins/jobs/docker-builder/cache on the Jenkins machine, which is outside the workspace directory of the Jenkins job. The section highlighted in red in the above screenshot shows the configuration required to enable this plugin.

3. Executing the "bundle install" and "rake assets:precompile" commands before "docker build" command

Using the "Execute shell" build step provided by Jenkins, we execute the bundle install and rake assets:precompile commands just before the docker build command invoked by the CloudBees Docker Build and Publish plugin. Since the Job Cacher plugin already restores the vendor/cache, tmp/cache/assets and public/assets directories from the previous build into the current build, the bundle install and rake assets:precompile commands reuse the cache and run faster.
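The contents of such an "Execute shell" step might look roughly like the following. This is a sketch under stated assumptions rather than our exact job configuration: the `run` and `prebuild` helpers and the DRY_RUN switch are hypothetical, added so that the commands can be previewed without Bundler or Rails being installed.

```shell
#!/bin/sh
# With DRY_RUN=1 (the default here) the commands are only printed;
# set DRY_RUN=0 in a real job to execute them.
DRY_RUN="${DRY_RUN:-1}"
RAILS_ENV="${RAILS_ENV:-production}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

prebuild() {
  # Gems are installed into vendor/cache, which the cache plugin restored earlier.
  # The precompile step only runs if bundle install succeeded.
  run bin/bundle install --without development test --path vendor/cache &&
    run env RAILS_ENV="$RAILS_ENV" bin/rake assets:precompile
}

prebuild
```

The docker build command then runs afterwards and simply adds the already installed gems and precompiled assets to the image.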

The updated Dockerfile now has fewer instructions.

FROM bigbinary/xyz-base:latest

ENV APP_PATH /data/app/

WORKDIR $APP_PATH

ADD . $APP_PATH

CMD ["bin/bundle", "exec", "puma"]

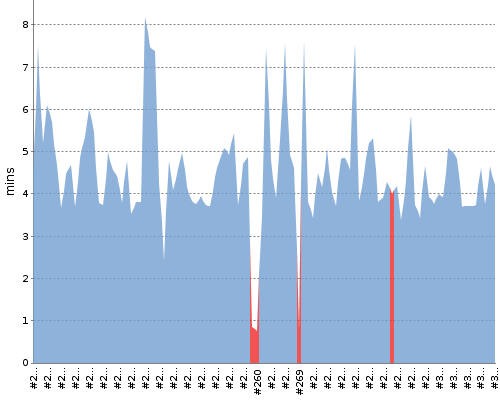

With this approach, average Jenkins build time is now between 3.5 to 4.5 minutes.

Following graph shows the build time trend of some of the recent builds on our Jenkins server.

Please note that the spikes in the above graph show that certain Jenkins builds sometimes took more than 5 minutes because other builds were running concurrently at that time. Because our Jenkins server has a limited set of resources, concurrently running builds often run longer than estimated.

We are still looking to improve the containerization speed even further while keeping the image size small. Please let us know if there's anything else we can do to improve the containerization process.

Note that our Jenkins server runs on Ubuntu, which is based on Debian. Our base Docker image is also based on Debian. Some of the gems in our Gemfile have native extensions written in C. The gems pre-installed on the Jenkins machine have been working without any issues while running inside the Docker containers on Kubernetes. This may not work if the two platforms differ, since native extension gems installed on the Jenkins host may fail to work inside the Docker container.
